In recent years, AI has evolved from simple chatbots into complex agents capable of planning, reasoning, and performing tasks autonomously. But to transform these AI agents into powerful, real-world automation tools, they need to interact with the outside world through clear and scalable channels. That’s where n8n and the Model Context Protocol (MCP) come into play.

This post explores how these components work together:

- What is an AI Agent?

- What is MCP, and why does it matter?

- What are the components of MCP, and how do they operate?

- What is n8n, and why does it fit so well in this setup?

- Why is MCP + n8n better than using an AI Agent in n8n directly?

- How do you build a working automation flow with these tools?


🤖 What is an AI Agent?

An AI Agent is not just a model like GPT or Claude — it’s an intelligent system that can:

- Perceive a situation

- Decide on a plan of action

- Execute a task (by calling APIs, running scripts, sending emails, etc.)

AI agents are typically built on top of LLMs and extended with components such as vector stores for retrieval, memory, planning logic, and tool integrations.
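To make the perceive, decide, execute cycle concrete, here is a minimal Python sketch. The `decide` function is a hypothetical stand-in for an LLM call (it uses simple keyword rules), and the tools `send_email` and `fetch_analytics` are illustrative assumptions, not part of any real agent framework.

```python
from typing import Callable

# Hypothetical tools; a real agent would call APIs or n8n workflows here.
def send_email(to: str, subject: str) -> str:
    return f"email sent to {to}: {subject}"

def fetch_analytics(metric: str) -> str:
    return f"report for {metric} (placeholder)"

TOOLS: dict[str, Callable[..., str]] = {
    "send_email": send_email,
    "fetch_analytics": fetch_analytics,
}

def perceive(user_input: str) -> dict:
    """Perceive: normalize raw input into an observation."""
    return {"text": user_input.strip().lower()}

def decide(observation: dict) -> tuple[str, dict]:
    """Decide: stand-in for an LLM call, using keyword rules to pick a tool."""
    if "email" in observation["text"]:
        return "send_email", {"to": "user@example.com", "subject": "Welcome!"}
    return "fetch_analytics", {"metric": "signups"}

def execute(tool_name: str, args: dict) -> str:
    """Execute: run the chosen tool with the chosen arguments."""
    return TOOLS[tool_name](**args)

def run_agent(user_input: str) -> str:
    return execute(*decide(perceive(user_input)))

print(run_agent("Please send the welcome email"))  # email sent to user@example.com: Welcome!
```

In a real agent, `decide` is where the LLM (plus memory and retrieval) does the reasoning; the other two steps stay mechanical.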

How AI Agents differ from regular LLMs

| Feature | Regular LLM (e.g., GPT) | AI Agent |
|---|---|---|
| Purpose | Text generation, Q&A | Decision making + action execution |
| Autonomy | Stateless, reactive | Stateful, proactive |
| Tools | Usually none | Access to tools (APIs, databases, workflows) |
| Planning | Limited | Multi-step planning and execution |

So while a regular model can answer your questions, an AI agent can get things done. It can book a meeting, send an email, fetch analytics, or even deploy code — depending on the tools it’s integrated with.

📦 What is MCP (Model Context Protocol)?

MCP is an open, standard protocol (a "common language") that defines how an AI application discovers the tools available to it and describes the actions it wants those tools to perform.

Why MCP?

Without MCP:

- Each integration is custom and hardcoded

- AI and the environment are tightly coupled

With MCP:

- The AI outputs actions in a standardized JSON format (MCP messages follow JSON-RPC 2.0)

- External systems like n8n, Zapier, or your own server know how to interpret and act on those instructions
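The practical effect of a standardized format is that the receiving side needs one entry point that reads the action and looks up a handler, instead of one hardcoded integration per caller. A minimal Python sketch, assuming hypothetical action types `http-request` and `log` modeled loosely on this post's email example:

```python
import json
from typing import Callable

Handler = Callable[[dict], str]
HANDLERS: dict[str, Handler] = {}

def register(action_type: str):
    """Decorator: map an action 'type' to the function that executes it."""
    def wrap(fn: Handler) -> Handler:
        HANDLERS[action_type] = fn
        return fn
    return wrap

@register("http-request")
def http_request(action: dict) -> str:
    return f"{action['method']} {action['url']}"  # stub: no network call

@register("log")
def log_message(action: dict) -> str:
    return f"logged: {action['message']}"

def dispatch(raw: str) -> str:
    """Parse a JSON action and hand it to whichever handler owns its type."""
    action = json.loads(raw)
    handler = HANDLERS.get(action.get("type"))
    if handler is None:
        raise ValueError(f"unsupported action type: {action.get('type')!r}")
    return handler(action)

print(dispatch('{"type": "log", "message": "hello"}'))  # logged: hello
```

Adding a new integration means registering one more handler; the AI side and `dispatch` stay unchanged.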

Components of MCP:

An MCP setup typically involves two key components:

- MCP Client (usually embedded in the AI Agent or the application hosting it)
  - Responsible for sending commands in the MCP format.
  - Wraps the LLM's chosen action in a machine-readable schema.
- MCP Server (execution layer)
  - Interprets MCP commands and routes them to execution engines like n8n.
  - Acts as a middleware layer and can add validation, logging, and routing.

This separation allows developers to update execution logic or models independently, creating highly maintainable systems.
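Under the hood, MCP messages follow JSON-RPC 2.0: a client invokes a server-side tool with a `tools/call` request whose `params` carry the tool `name` and its `arguments`, and the server answers with a `result` holding a `content` list. The sketch below mimics that client/server split in a single process with no transport. The `MiniServer` class and the `send_email` tool are hypothetical; a real deployment would use an official MCP SDK over a transport such as stdio or HTTP.

```python
import json
from itertools import count
from typing import Callable

_ids = count(1)

def make_tool_call(name: str, arguments: dict) -> str:
    """Client side: wrap the model's chosen action in a JSON-RPC 2.0 request."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })

class MiniServer:
    """Server side (hypothetical, in-memory): log, validate, and route tool calls."""

    def __init__(self) -> None:
        self.tools: dict[str, Callable[..., str]] = {}
        self.log: list[str] = []

    def tool(self, fn: Callable[..., str]) -> Callable[..., str]:
        self.tools[fn.__name__] = fn
        return fn

    def handle(self, raw: str) -> dict:
        req = json.loads(raw)
        self.log.append(f"{req.get('method')} id={req.get('id')}")
        if req.get("method") != "tools/call":
            # This sketch only implements tools/call; -32601 is JSON-RPC's "Method not found"
            return {"jsonrpc": "2.0", "id": req.get("id"),
                    "error": {"code": -32601, "message": "Method not found"}}
        name = req["params"]["name"]
        if name not in self.tools:
            # -32602 is JSON-RPC's "Invalid params" code
            return {"jsonrpc": "2.0", "id": req["id"],
                    "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
        text = self.tools[name](**req["params"].get("arguments", {}))
        return {"jsonrpc": "2.0", "id": req["id"],
                "result": {"content": [{"type": "text", "text": text}], "isError": False}}

server = MiniServer()

@server.tool
def send_email(to: str, subject: str) -> str:
    return f"queued email to {to}: {subject}"  # a real server might forward this to n8n

response = server.handle(make_tool_call("send_email", {"to": "user@example.com", "subject": "Welcome!"}))
print(response["result"]["content"][0]["text"])  # queued email to user@example.com: Welcome!
```

Because the client only emits requests and the server only interprets them, either side can be replaced without touching the other, which is the separation described above.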

Example (simplified for illustration; this is not the literal MCP wire format):

```json
{
  "type": "http-request",
  "method": "POST",
  "url": "https://api.example.com/send-email",
  "headers": {
    "Authorization": "Bearer xyz"
  },
  "body": {
    "to": "user@example.com",
    "subject": "Welcome!",
    "message": "Thanks for signing up."
  }
}
```

The AI doesn’t care how the email is sent — only what needs to be done. The execution engine (like n8n) takes over.
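As one illustration of the execution engine "taking over", here is a minimal Python sketch that turns the `http-request` action above into a ready-to-send HTTP request using only the standard library. Field names follow the example payload; the default `Content-Type: application/json` header is an assumption. The request is built but deliberately not sent.

```python
import json
import urllib.request

def to_http_request(action: dict) -> urllib.request.Request:
    """Translate an 'http-request' action into a urllib Request (not sent)."""
    if action.get("type") != "http-request":
        raise ValueError("expected an http-request action")
    headers = {"Content-Type": "application/json", **action.get("headers", {})}
    body = json.dumps(action.get("body", {})).encode("utf-8")
    return urllib.request.Request(
        action["url"], data=body, headers=headers, method=action.get("method", "POST")
    )

action = {
    "type": "http-request",
    "method": "POST",
    "url": "https://api.example.com/send-email",
    "headers": {"Authorization": "Bearer xyz"},
    "body": {"to": "user@example.com", "subject": "Welcome!", "message": "Thanks for signing up."},
}
req = to_http_request(action)
print(req.get_method(), req.full_url)  # POST https://api.example.com/send-email
# urllib.request.urlopen(req) would actually send it; skipped here.
```

The AI only produced the dictionary; everything about headers, encoding, and delivery lives on the execution side.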

🧰 What is n8n?

n8n is an open-source automation platform. It allows you to:

- Build workflows using a no-code/low-code interface

- Trigger actions from webhooks, cron jobs, or other events

- Connect to more than 300 services

Why is it great for AI Agents?

- Has HTTP nodes, database nodes, email nodes, etc.

- Can easily be triggered by a webhook or a REST API call

- Can interpret MCP-style actions and route them to the appropriate logic
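Since n8n workflows can start from a Webhook node, routing an MCP-style action can be as simple as mapping its name to a webhook path. n8n serves production webhooks under `/webhook/<path>` and test webhooks under `/webhook-test/<path>`. In this Python sketch the base URL `https://n8n.example.com` and the `ROUTES` table are hypothetical, and each Webhook node is assumed to be configured to accept POST; the `send-welcome` path matches the sample workflow at the end of this post.

```python
import json
import urllib.request

N8N_BASE_URL = "https://n8n.example.com"  # hypothetical n8n instance

# Hypothetical mapping from MCP-style action names to n8n webhook paths.
ROUTES = {
    "send_welcome_email": "send-welcome",
    "create_invoice": "create-invoice",
}

def route_to_n8n(action_name: str, arguments: dict,
                 test_mode: bool = False) -> urllib.request.Request:
    """Build the POST that triggers the n8n workflow responsible for this action."""
    if action_name not in ROUTES:
        raise KeyError(f"no workflow registered for {action_name!r}")
    prefix = "webhook-test" if test_mode else "webhook"
    url = f"{N8N_BASE_URL}/{prefix}/{ROUTES[action_name]}"
    return urllib.request.Request(
        url,
        data=json.dumps(arguments).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = route_to_n8n("send_welcome_email", {"name": "Ada", "email": "ada@example.com"})
print(req.full_url)  # https://n8n.example.com/webhook/send-welcome
```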

❓ But n8n already supports OpenAI and other AI nodes, so why MCP?

Yes, n8n can connect directly to OpenAI or other LLMs. But that means:

- The AI logic and workflow structure are tightly bound

- You’d need different workflows per AI model or provider

- You lose flexibility and reusability

With MCP:

- You separate AI reasoning from workflow execution

- Your workflows become universal — they just receive MCP commands and execute

- You can swap LLMs (OpenAI, Claude, local models) without changing your automation logic

This decoupling is key to building scalable, maintainable AI systems.
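The model-swapping claim can be sketched with a small interface: any planner that emits the same command shape can be plugged in, and the execution side never changes. In this Python sketch, `HostedModelPlanner` and `LocalModelPlanner` are hypothetical stand-ins (stubbed with a regex and with string splitting) for wrappers around a hosted LLM and a local model.

```python
import json
import re
from typing import Protocol

class Planner(Protocol):
    """Anything that turns a user request into an MCP-style command."""
    def plan(self, request: str) -> dict: ...

class HostedModelPlanner:
    """Hypothetical wrapper for a hosted LLM (stubbed with a regex)."""
    def plan(self, request: str) -> dict:
        email = re.search(r"[\w.+-]+@[\w-]+\.[\w.]+", request).group(0)
        return {"tool": "send_welcome_email", "arguments": {"email": email}}

class LocalModelPlanner:
    """Hypothetical wrapper for a local model (stubbed with string splitting)."""
    def plan(self, request: str) -> dict:
        email = next(word for word in request.split() if "@" in word)
        return {"tool": "send_welcome_email", "arguments": {"email": email}}

def execute(command: dict) -> str:
    """Automation side: reads only the command, never the model that produced it."""
    return f"{command['tool']} -> {json.dumps(command['arguments'])}"

def handle(planner: Planner, request: str) -> str:
    return execute(planner.plan(request))

for planner in (HostedModelPlanner(), LocalModelPlanner()):
    print(handle(planner, "Welcome ada@example.com please"))
```

Both planners print the same command, so `execute` (standing in for the n8n side) works with either one unchanged.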

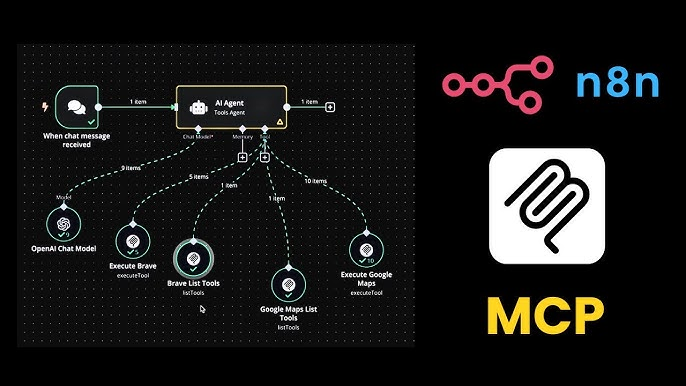

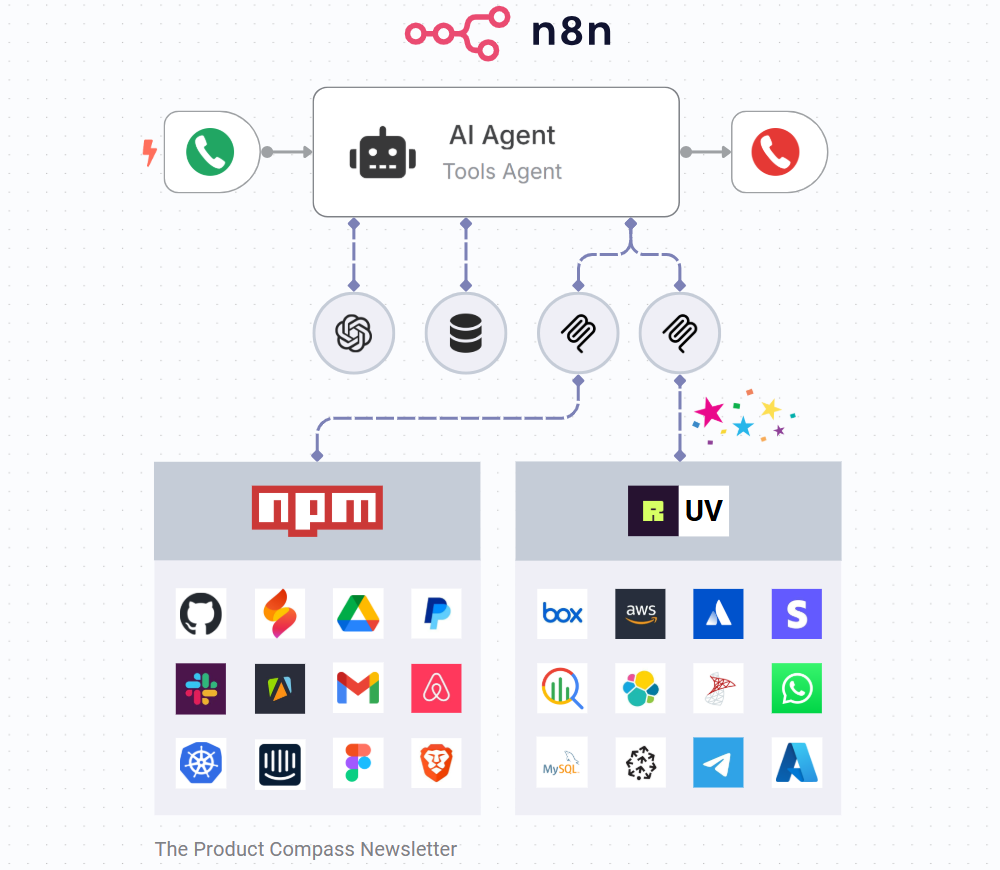

⚙️ Putting It All Together: AI + MCP + n8n

Let's walk through the workflow architecture shown in the diagram:

Flow Description:

- A user input or trigger arrives (voice or text)
- The AI Agent receives the request and performs reasoning
- The AI Agent consults:
  - LLMs for language understanding
  - A vector DB for memory and context
  - Internal tools or services for task decisions
- The AI Agent uses the MCP Client to produce a structured JSON command
- The MCP Server receives and routes the instruction
- n8n receives and processes the request, using its rich ecosystem of nodes:
  - Communication (Slack, Email, Telegram)
  - Cloud services (AWS, Azure, GCP)
  - Databases (MySQL, BigQuery, etc.)
  - Business tools (Stripe, Notion, Airtable)
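The "vector DB for memory and context" step boils down to nearest-neighbor search over embeddings. Here is a toy Python sketch of that lookup; it substitutes bag-of-words counts for a learned embedding model and an in-memory list for a real vector database, both purely for illustration.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (a real system would use a trained model)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class VectorMemory:
    """In-memory stand-in for a vector DB: store texts, return the closest matches."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

memory = VectorMemory()
memory.add("customer prefers email over slack")
memory.add("invoices are stored in airtable")
print(memory.search("where are invoices stored"))  # ['invoices are stored in airtable']
```

The retrieved snippets are what the agent would add to the LLM prompt before deciding which MCP command to emit.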

Why this combination is powerful:

- AI can reason about what needs to be done

- MCP provides a universal format to describe the job

- n8n connects and executes across tools with little or no code

💡 Why This Architecture Wins

| Feature | Without MCP | With MCP |
|---|---|---|
| Reusability | Low | High |
| Modularity | Poor | Excellent |
| Interoperability | Tool-specific | Multi-tool compatible |
| Debuggability | Hard | Structured |

🧪 Sample n8n Workflow (Pseudo-code)

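```yaml
# Pseudo-code, not an importable n8n workflow export
- node: Webhook Trigger
  method: POST
  url: /webhook/send-welcome
- node: Set Variables
  input:
    # The Webhook node places the POST payload under "body"
    name: "{{ $json.body.name }}"
    email: "{{ $json.body.email }}"
- node: Send Email
  service: Gmail
  to: "{{ $json.email }}"
  subject: "Welcome, {{ $json.name }}!"
  message: "We're excited to have you on board."
```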
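To see how data moves through those three nodes, here is a local Python simulation. It is not n8n's expression engine: the `render` helper only substitutes simple `{{ $json.path }}` placeholders, the Webhook output shape is simplified (assuming the usual n8n layout where the POST payload sits under `body`), and the email is returned as a dict instead of being sent through Gmail.

```python
import re

def lookup(item: dict, path: str):
    """Follow a dotted path such as 'body.name' through nested dicts."""
    value = item
    for key in path.split("."):
        value = value[key]
    return value

def render(template: str, item: dict) -> str:
    """Substitute simple {{ $json.path }} placeholders (not n8n's full expression engine)."""
    return re.sub(r"\{\{\s*\$json\.([\w.]+)\s*\}\}",
                  lambda m: str(lookup(item, m.group(1))), template)

def webhook_trigger(payload: dict) -> dict:
    # Simplified Webhook node item: the POST payload sits under "body"
    return {"headers": {}, "query": {}, "body": payload}

def set_variables(item: dict) -> dict:
    # Keep only the fields the next node needs
    return {"name": render("{{ $json.body.name }}", item),
            "email": render("{{ $json.body.email }}", item)}

def send_email(item: dict) -> dict:
    # Build the message a Gmail node would send; nothing is sent here
    return {"to": render("{{ $json.email }}", item),
            "subject": render("Welcome, {{ $json.name }}!", item),
            "message": "We're excited to have you on board."}

item = webhook_trigger({"name": "Ada", "email": "ada@example.com", "plan": "free"})
email = send_email(set_variables(item))
print(email["subject"])  # Welcome, Ada!
```

Each function corresponds to one node, and each node sees only the output of the previous one, which is why the Set step is needed to lift `name` and `email` out of `body`.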

🚀 Conclusion

In the world of automation, AI agents alone are not enough. By combining them with n8n and the Model Context Protocol, we:

- Separate the thinking (AI) from the doing (n8n)

- Enable scalable and interoperable automation

- Future-proof our stack against changes in tools

This architecture is not just a trend — it’s a powerful pattern for the future of automation.
