Hey there! If you’ve been dipping your toes into the world of AI, you’ve probably heard how powerful large language models (LLMs) like Claude or GPT can be. They can write code, answer questions, and even chat like a friend. But here’s the catch: on their own, these models are like super-smart brains without arms or legs. They can’t interact with the real world, fetch live data, or connect to the tools we use every day. That’s where the Model Context Protocol (MCP) comes in, and today I’m going to break it down for you in a way that’s easy to grasp. Let’s dive in!


What is Model Context Protocol (MCP)?

Imagine you have a super-smart assistant (an AI model) who’s stuck in a bubble. It knows a ton of stuff, but it can’t check your emails, browse GitHub, or look up the weather unless you manually feed it that info. MCP is like a universal USB cable that connects your AI assistant to the outside world—your apps, databases, and tools—so it can see and do more.

In technical terms, MCP is an open standard created by Anthropic (the folks behind Claude) to help AI models talk to external systems in a consistent, organized way. It’s a protocol (a set of rules) that makes it easier for developers to plug AI into real-world data and actions without reinventing the wheel every time. Think of it as a bridge that lets your AI go from just thinking to doing.

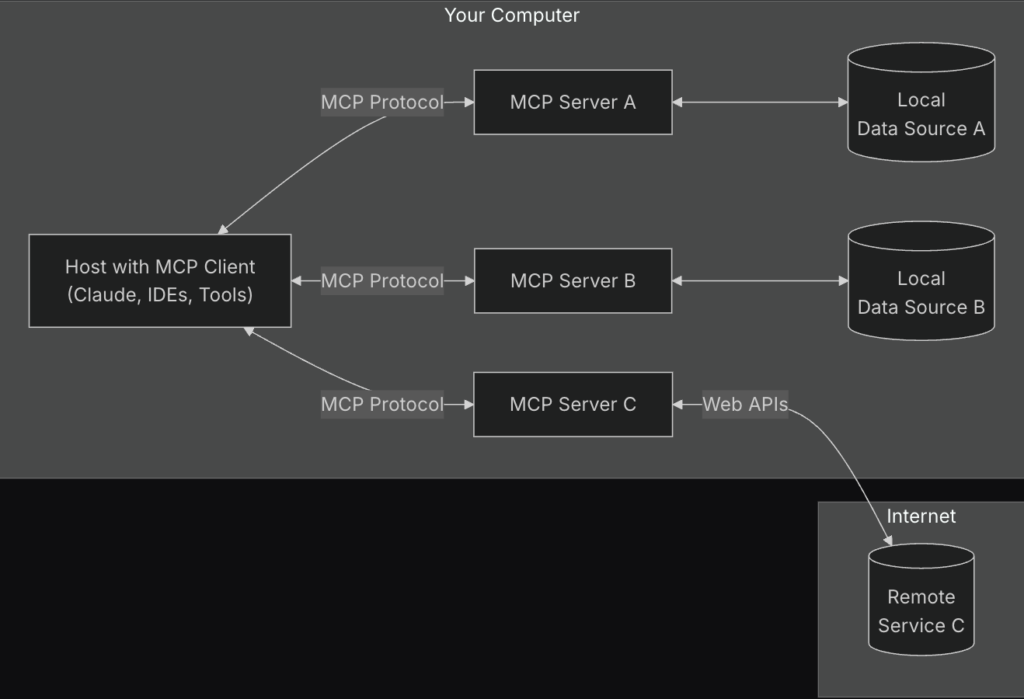

How Does MCP Work? The Architecture Made Simple

MCP uses a client-server architecture, which might sound fancy, but it’s just a way of organizing how things talk to each other. Here’s the breakdown:

- MCP Host: This is the main app where your AI lives—like an IDE (e.g., VS Code), a desktop tool (e.g., Claude Desktop), or even a custom app you build. It’s the “home base” for your AI assistant.

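- MCP Client: This is the part inside the host that reaches out to connect with external systems. Think of it as the AI’s messenger: it sends requests and brings back answers. Strictly speaking, the host runs one client per server, each with its own connection, but picturing them as a single messenger works fine for now.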

- MCP Server: These are lightweight programs that connect to specific data or tools—like your GitHub repo, a database, or a weather API. Each server is like a little helper that gives the AI access to one specific thing.

- Transport Layer: This is the “highway” that carries messages between the client and server. MCP messages are JSON-RPC 2.0 requests and responses (a lightweight JSON format for calling functions remotely), sent over standard input/output (stdio) for servers running on your machine or over HTTP for remote ones.
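To make that last bullet a bit more concrete, here’s a minimal sketch of what one message could look like on the wire. It assumes the stdio transport, where each message travels as a single line of JSON. The `tools/call` method name comes from the MCP spec, but the `get_weather` tool and its arguments are made up for illustration, and no real server is involved.

```python
import json
from itertools import count

_next_id = count(1)  # JSON-RPC requests carry an id so replies can be matched


def make_request(method, params):
    """Build a JSON-RPC 2.0 request as a plain dict."""
    return {"jsonrpc": "2.0", "id": next(_next_id), "method": method, "params": params}


def encode_line(message):
    """Serialize one message as a single newline-terminated line (stdio framing)."""
    return json.dumps(message, separators=(",", ":")) + "\n"


def decode_line(line):
    """Parse one line back into a dict and check the JSON-RPC version tag."""
    message = json.loads(line)
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    return message


# Hypothetical tool name and arguments, for illustration only.
request = make_request("tools/call", {"name": "get_weather", "arguments": {"city": "Paris"}})
wire = encode_line(request)
print(wire, end="")
print(decode_line(wire) == request)
```

In a real setup the client would write that line to the server’s stdin and read the reply from its stdout; here the message is only encoded and decoded in the same process.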

Here’s a simple picture of how it fits together:

    [Your App: MCP Host]
             |
       [MCP Client] ---> [MCP Server: GitHub]
             |---------> [MCP Server: Database]
             |---------> [MCP Server: Weather API]

The host talks to the AI model, the client talks to the servers, and the servers talk to the real-world stuff. Easy, right?

A Simple Example: Coding with MCP

Let’s say you’re working in an IDE, and you ask your AI assistant: “Write a function to fetch the latest commits from my GitHub repo.” Without MCP, the AI might just guess or give you a generic answer. With MCP, here’s what happens:

- You type your request into the IDE (the MCP Host).

- The MCP Client inside the IDE sends a message to an MCP Server connected to GitHub.

- The GitHub MCP Server fetches the latest commits (using your credentials securely).

- The server sends the commit data back to the client.

- The AI in the IDE uses that data to write a function tailored to your repo.
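The steps above can be sketched in a few lines of Python. This is a toy, in-process stand-in and not a real MCP SDK: `FakeGitHubServer`, the `list_commits` tool, the repo name, and the sample commits are all placeholders I made up so the example runs without network access or credentials. The result shape is also simplified compared to what a real server returns.

```python
SAMPLE_COMMITS = [  # placeholder data, not from a real repository
    {"author": "alice", "message": "Fix login redirect"},
    {"author": "bob", "message": "Add retry to uploader"},
    {"author": "alice", "message": "Update README"},
]


class FakeGitHubServer:
    """Stands in for an MCP server that exposes one tool, `list_commits`."""

    def handle(self, request):
        if request["method"] != "tools/call":
            return self._error(request, -32601, "Method not found")
        params = request["params"]
        if params["name"] != "list_commits":
            return self._error(request, -32602, f"Unknown tool: {params['name']}")
        limit = params["arguments"].get("limit", 5)
        result = {"commits": SAMPLE_COMMITS[:limit]}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}

    @staticmethod
    def _error(request, code, message):
        error = {"code": code, "message": message}
        return {"jsonrpc": "2.0", "id": request["id"], "error": error}


class Client:
    """Stands in for the MCP client: one client talks to one server."""

    def __init__(self, server):
        self._server = server
        self._next_id = 1

    def call_tool(self, name, arguments):
        request = {
            "jsonrpc": "2.0",
            "id": self._next_id,
            "method": "tools/call",
            "params": {"name": name, "arguments": arguments},
        }
        self._next_id += 1
        response = self._server.handle(request)
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]


client = Client(FakeGitHubServer())
result = client.call_tool("list_commits", {"repo": "my-org/my-repo", "limit": 2})
# The host would now hand this data to the model as context for its answer.
for commit in result["commits"]:
    print(f"{commit['author']}: {commit['message']}")
```

The point of the sketch is the division of labor: the client only knows how to send a request and read a reply, while everything GitHub-specific lives in the server.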

The result? You get code that actually works with your project, not some made-up example. Here’s what the AI might spit out:

    def get_latest_commits(repo_name):
        # github_api is a placeholder for whatever GitHub client your project uses
        commits = github_api.fetch_commits(repo_name)  # Real data from MCP!
        for commit in commits[:5]:
            print(f"{commit['author']}: {commit['message']}")

Real-World Use Case: AI-Powered Customer Support

Now, let’s zoom out to a real-world scenario. Imagine you’re building a chatbot for a company’s customer support team. Customers ask things like, “Where’s my order?” or “What’s the return policy?” Without MCP, your chatbot would need custom code to connect to the company’s order database, policy docs, and maybe even an email system. That’s a lot of work!

With MCP, you can set it up like this:

- MCP Host: The chatbot app.

- MCP Servers:

  - One server connects to the order database (e.g., “Order #123 is shipped!”).

  - Another server pulls the latest return policy from a Google Drive doc.

  - A third server links to an email system to send follow-ups.

When a customer asks, “Where’s my order?”:

- The chatbot (host) sends the question to the AI.

- The AI decides it needs the order status, so the MCP Client asks the order database server for the status of Order #123.

- The server replies with “Shipped on March 30, 2025.”

- The AI crafts a friendly response: “Your order #123 shipped on March 30, 2025—expect it soon!”

The best part? If the company adds a new tool (like a tracking API), you just plug in a new MCP Server—no need to rewrite the whole chatbot. It’s scalable and reusable!
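Here’s a rough sketch of that plug-in idea. Everything in it is hypothetical: the server names, the tool names (`order_status`, `track_package`), and the hard-coded replies are placeholders, and plain Python functions stand in for real MCP servers.

```python
class SupportHost:
    """Toy chatbot host that keeps one entry per connected server."""

    def __init__(self):
        self._servers = {}  # server name -> {tool name: callable}

    def connect(self, server_name, tools):
        """Register a server and the tools it offers."""
        self._servers[server_name] = dict(tools)

    def available_tools(self):
        """Flatten every server's tools into one sorted list of names."""
        return sorted(name for tools in self._servers.values() for name in tools)

    def call(self, tool_name, **arguments):
        """Route a tool call to whichever server offers that tool."""
        for tools in self._servers.values():
            if tool_name in tools:
                return tools[tool_name](**arguments)
        raise KeyError(f"no connected server offers {tool_name!r}")


def order_status(order_id):
    # Placeholder reply; a real server would query the order database.
    return {"order_id": order_id, "status": "shipped", "shipped_on": "March 30, 2025"}


def track_package(order_id):
    # Placeholder reply; a real server would call a carrier's tracking API.
    return {"order_id": order_id, "location": "regional depot"}


host = SupportHost()
host.connect("orders", {"order_status": order_status})

info = host.call("order_status", order_id=123)
print(f"Your order #{info['order_id']} shipped on {info['shipped_on']}.")

# Adding the tracking server is one extra connect() call; SupportHost is unchanged.
host.connect("tracking", {"track_package": track_package})
print(host.available_tools())
```

Notice that `SupportHost` never mentions orders or tracking by name. That’s the reusability win in miniature: new capabilities arrive as new servers, not as edits to the chatbot.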

Wrapping Up

MCP is like giving your AI assistant a toolbox and a map to the real world. It’s all about connecting smart models to the data and actions they need to shine. Whether you’re building a coding helper, a chatbot, or something wild we haven’t thought of yet, MCP makes it easier to bring your ideas to life.

So, next time you’re playing with an AI model, think: “What if it could talk to my database or my favorite API?” With MCP, it can—and you can build it! Start small—maybe try connecting an MCP Server to a simple file or API—and see where it takes you. Happy coding, and welcome to the future of AI!
